PySpark Cheat Sheet

Source: GitHub, Pyspark-cheatsheet

This cheat sheet will help you learn PySpark and write PySpark apps faster. Everything in here is fully functional PySpark code you can run or adapt to your programs.

These snippets are licensed under the CC0 1.0 Universal License. That means you can freely copy and adapt these code snippets and you don't need to give attribution or include any notices.

These snippets use DataFrames loaded from various data sources:

- "Auto MPG Data Set" available from the UCI Machine Learning Repository.

- customer_spend.csv, a generated time series dataset.

- date_examples.csv, a generated dataset with various date and time formats.

- weblog.csv, a cleaned version of a web log dataset.

These snippets were tested against the Spark 3.2.2 API. This page was last updated 2022-09-19 15:31:03.

Make note of these helpful links:

- PySpark DataFrame Operations

- Built-in Spark SQL Functions

- MLlib Main Guide

- Structured Streaming Guide

- PySpark SQL Functions Source

Generate the Cheatsheet

You can generate the cheatsheet by running cheatsheet.py in your PySpark environment as follows:

- Install dependencies: pip3 install -r requirements.txt

- Generate README.md: python3 cheatsheet.py

- Generate cheatsheet.ipynb: python3 cheatsheet.py --notebook

Table of contents

- Accessing Data Sources

- Load a DataFrame from CSV

- Load a DataFrame from a Tab Separated Value (TSV) file

- Save a DataFrame in CSV format

- Load a DataFrame from Parquet

- Save a DataFrame in Parquet format

- Load a DataFrame from JSON Lines (jsonl) Formatted Data

- Save a DataFrame into a Hive catalog table

- Load a Hive catalog table into a DataFrame

- Load a DataFrame from a SQL query

- Load a CSV file from Amazon S3

- Load a CSV file from Oracle Cloud Infrastructure (OCI) Object Storage

- Read an Oracle DB table into a DataFrame using a Wallet

- Write a DataFrame to an Oracle DB table using a Wallet

- Write a DataFrame to a Postgres table

- Read a Postgres table into a DataFrame

- Data Handling Options

- Provide the schema when loading a DataFrame from CSV

- Save a DataFrame to CSV, overwriting existing data

- Save a DataFrame to CSV with a header

- Save a DataFrame in a single CSV file

- Save DataFrame as a dynamic partitioned table

- Overwrite specific partitions

- Load a CSV file with a money column into a DataFrame

- DataFrame Operations

- Add a new column to a DataFrame

- Modify a DataFrame column

- Add a column with multiple conditions

- Add a constant column

- Concatenate columns

- Drop a column

- Change a column name

- Change multiple column names

- Change all column names at once

- Convert a DataFrame column to a Python list

- Convert a scalar query to a Python value

- Consume a DataFrame row-wise as Python dictionaries

- Select particular columns from a DataFrame

- Create an empty dataframe with a specified schema

- Create a constant dataframe

- Convert String to Double

- Convert String to Integer

- Get the size of a DataFrame

- Get a DataFrame's number of partitions

- Get data types of a DataFrame's columns

- Convert an RDD to Data Frame

- Print the contents of an RDD

- Print the contents of a DataFrame

- Process each row of a DataFrame

- DataFrame Map example

- DataFrame Flatmap example

- Create a custom UDF

- Transforming Data

- Sorting and Searching

- Filter a column using a condition

- Filter based on a specific column value

- Filter based on an IN list

- Filter based on a NOT IN list

- Filter values based on keys in another DataFrame

- Get Dataframe rows that match a substring

- Filter a Dataframe based on a custom substring search

- Filter based on a column's length

- Multiple filter conditions

- Sort DataFrame by a column

- Take the first N rows of a DataFrame

- Get distinct values of a column

- Remove duplicates

- Grouping

- count(*) on a particular column

- Group and sort

- Filter groups based on an aggregate value, equivalent to SQL HAVING clause

- Group by multiple columns

- Aggregate multiple columns

- Aggregate multiple columns with custom orderings

- Get the maximum of a column

- Sum a list of columns

- Sum a column

- Aggregate all numeric columns

- Count unique after grouping

- Count distinct values on all columns

- Group by then filter on the count

- Find the top N per row group (use N=1 for maximum)

- Group key/values into a list

- Compute a histogram

- Compute global percentiles

- Compute percentiles within a partition

- Compute percentiles after aggregating

- Filter rows with values below a target percentile

- Aggregate and rollup

- Aggregate and cube

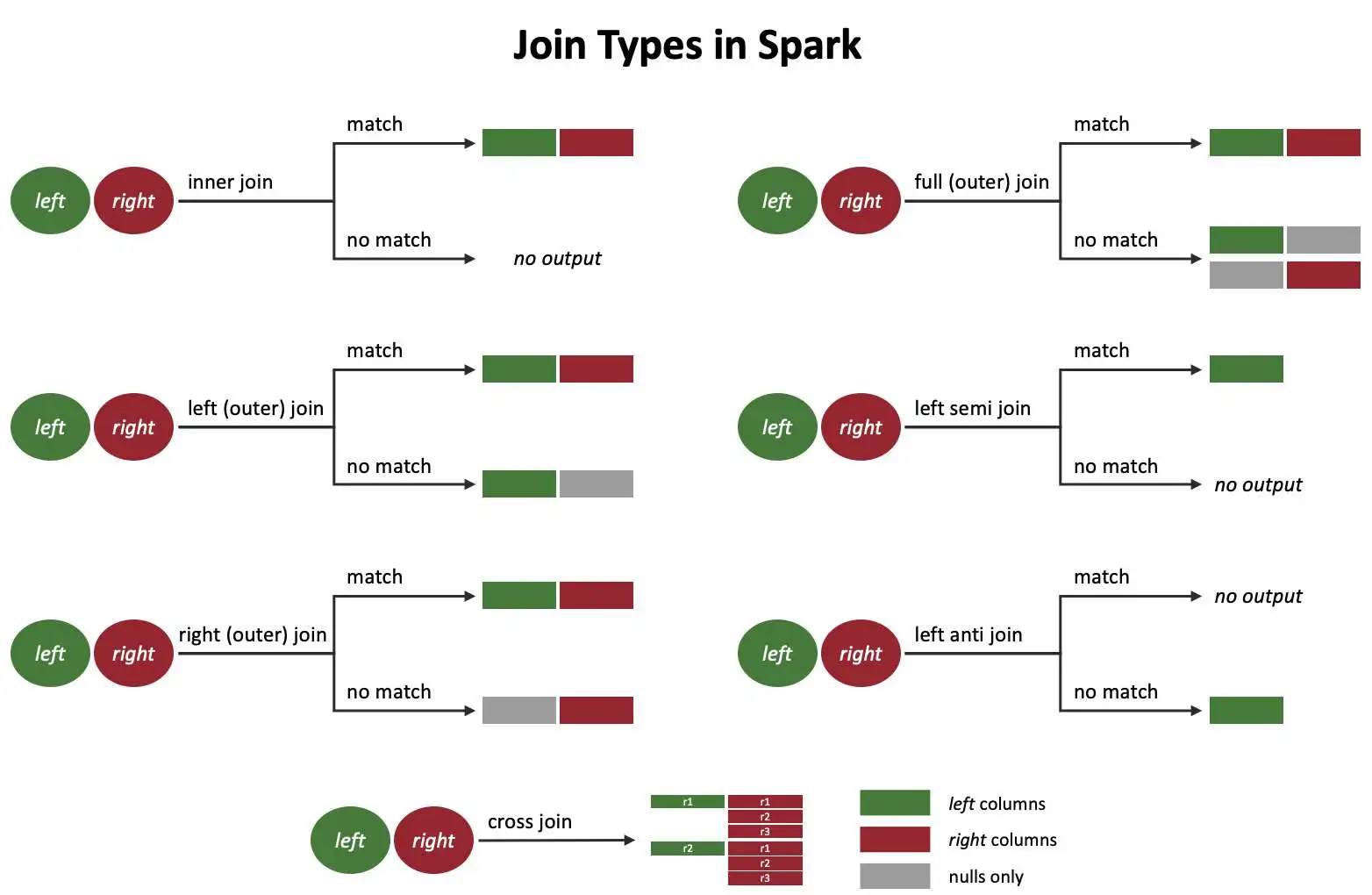

- Joining DataFrames

- File Processing

- Handling Missing Data

- Dealing with Dates

- Unstructured Analytics

- Pandas

- Convert Spark DataFrame to Pandas DataFrame

- Convert Pandas DataFrame to Spark DataFrame with Schema Detection

- Convert Pandas DataFrame to Spark DataFrame using a Custom Schema

- Convert N rows from a DataFrame to a Pandas DataFrame

- Grouped Aggregation with Pandas

- Use a Pandas Grouped Map Function via applyInPandas

- Data Profiling

- Data Management

- Save to a Delta Table

- Update records in a DataFrame using Delta Tables

- Merge into a Delta table

- Show Table Version History

- Load a Delta Table by Version ID (Time Travel Query)

- Load a Delta Table by Timestamp (Time Travel Query)

- Compact a Delta Table

- Add custom metadata to a Delta table write

- Read custom Delta table metadata

- Spark Streaming

- Connect to Kafka using SASL PLAIN authentication

- Create a windowed Structured Stream over input CSV files

- Create an unwindowed Structured Stream over input CSV files

- Add the current timestamp to a DataFrame

- Session analytics on a DataFrame

- Call a UDF only when a threshold is reached

- Streaming Machine Learning

- Control stream processing frequency

- Write a streaming DataFrame to a database

- Time Series

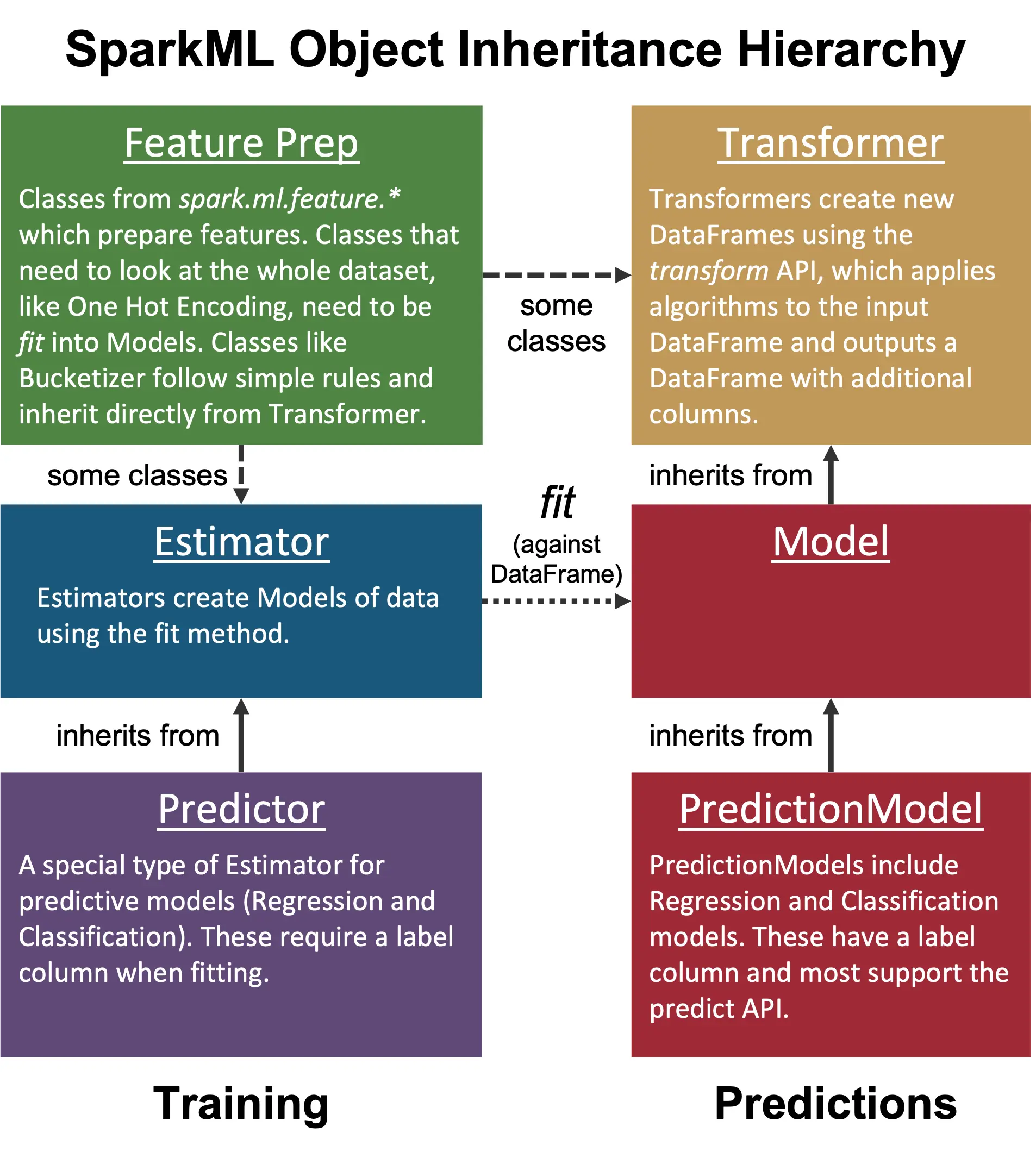

- Machine Learning

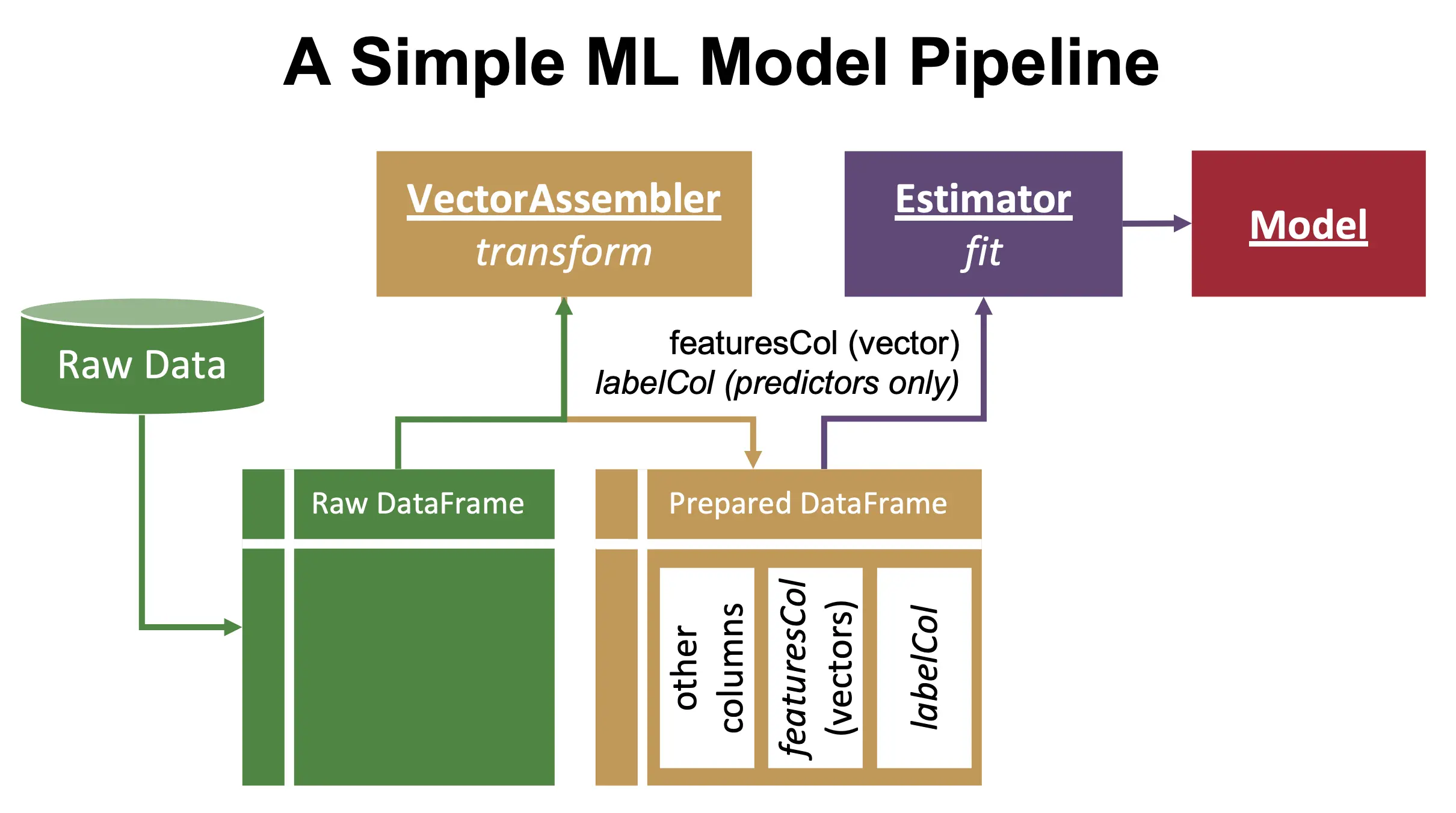

- Prepare data for training with a VectorAssembler

- A basic Random Forest Regression model

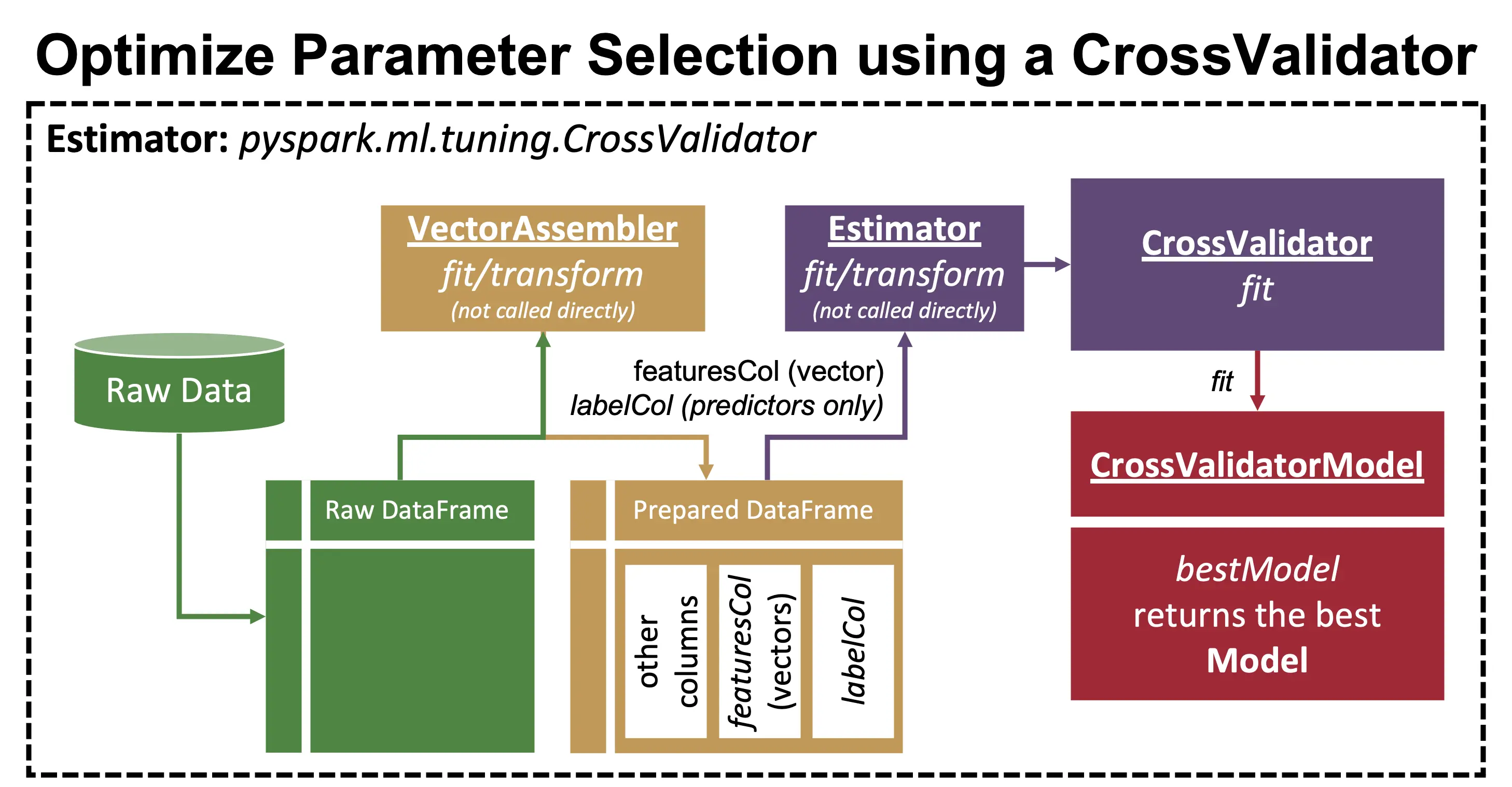

- Hyperparameter tuning

- Encode string variables as numbers

- One-hot encode a categorical variable

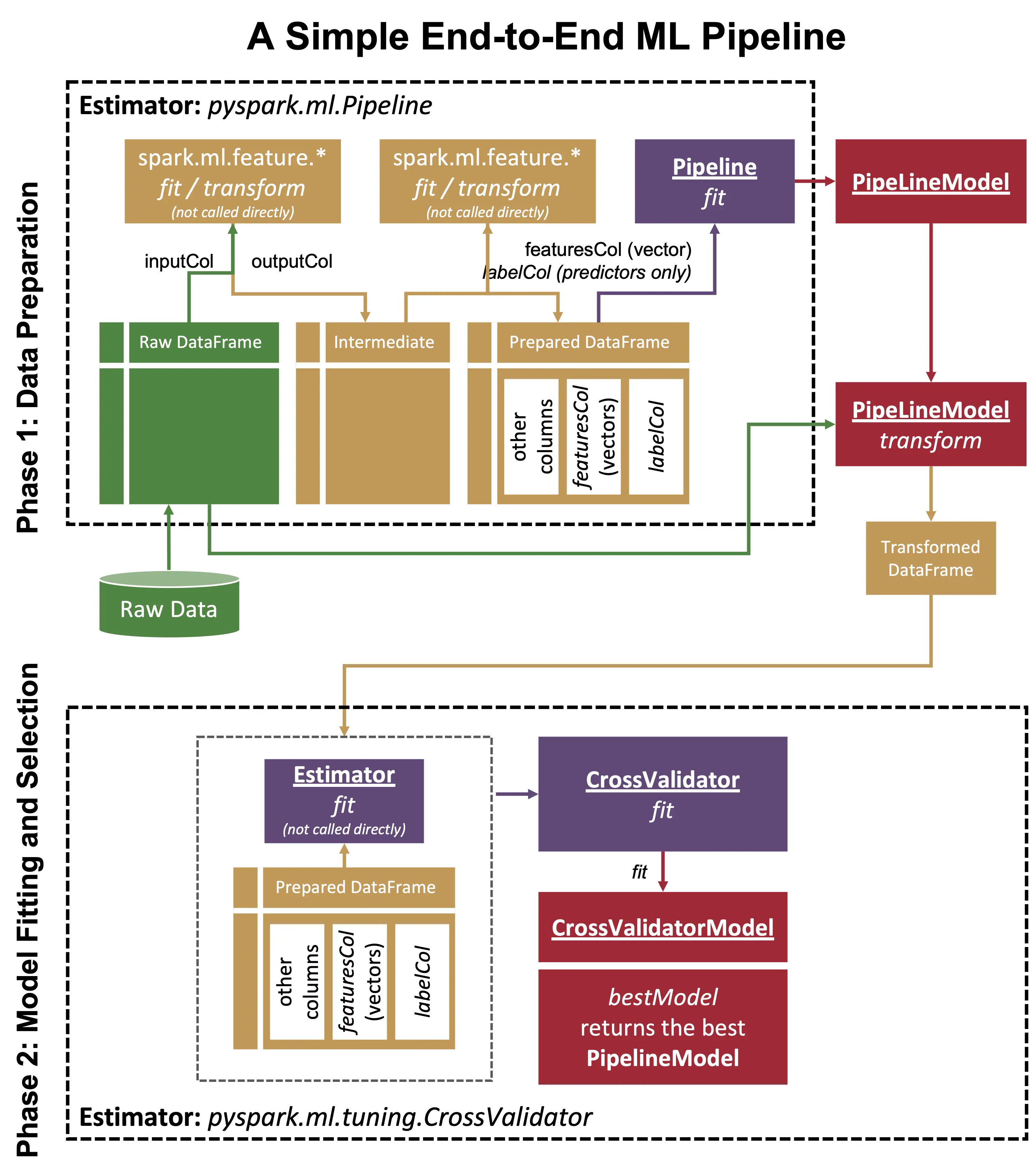

- Optimize a model after a data preparation pipeline

- Evaluate Model Performance

- Get feature importances of a trained model

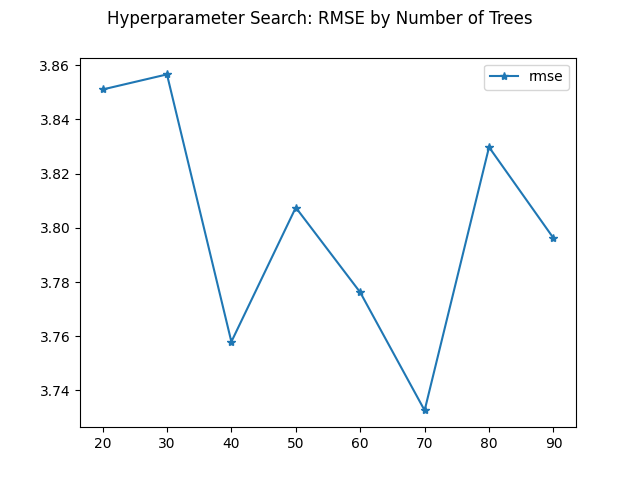

- Plot Hyperparameter tuning metrics

- Compute correlation matrix

- Save a model

- Load a model and use it for transformations

- Load a model and use it for predictions

- Load a classification model and use it to compute confidences for output labels

- Performance

- Get the Spark version

- Log messages using Spark's Log4J

- Cache a DataFrame

- Show the execution plan, with costs

- Partition by a Column Value

- Range Partition a DataFrame

- Change Number of DataFrame Partitions

- Coalesce DataFrame partitions

- Set the number of shuffle partitions

- Sample a subset of a DataFrame

- Run multiple concurrent jobs in different pools

- Print Spark configuration properties

- Set Spark configuration properties

- Publish Metrics to Graphite

- Increase Spark driver/executor heap space

Accessing Data Sources

Loading data stored in filesystems or databases, and saving it.

Load a DataFrame from CSV

See https://spark.apache.org/docs/latest/api/java/org/apache/spark/sql/DataFrameReader.html for a list of supported options.

df = spark.read.format("csv").option("header", True).load("data/auto-mpg.csv")

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Load a DataFrame from a Tab Separated Value (TSV) file

See https://spark.apache.org/docs/latest/api/java/org/apache/spark/sql/DataFrameReader.html for a list of supported options.

df = (

spark.read.format("csv")

.option("header", True)

.option("sep", "\t")

.load("data/auto-mpg.tsv")

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Save a DataFrame in CSV format

See https://spark.apache.org/docs/latest/api/java/org/apache/spark/sql/DataFrameWriter.html for a list of supported options.

auto_df.write.csv("output.csv")

Load a DataFrame from Parquet

df = spark.read.format("parquet").load("data/auto-mpg.parquet")

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Save a DataFrame in Parquet format

auto_df.write.parquet("output.parquet")

Load a DataFrame from JSON Lines (jsonl) Formatted Data

JSON Lines / jsonl format uses one JSON document per line. If you have data with mostly regular structure this is better than nesting it in an array. See jsonlines.org

df = spark.read.json("data/weblog.jsonl")

# Code snippet result:

+----------+----------+--------+----------+----------+------+

| client| country| session| timestamp| uri| user|

+----------+----------+--------+----------+----------+------+

|{false,...|Bangladesh|55fa8213| 869196249|http://...|dde312|

|{true, ...| Niue|2fcd4a83|1031238717|http://...|9d00b9|

|{true, ...| Rwanda|013b996e| 628683372|http://...|1339d4|

|{false,...| Austria|07e8a71a|1043628668|https:/...|966312|

|{false,...| Belize|b23d05d8| 192738669|http://...|2af1e1|

|{false,...|Lao Peo...|d83dfbae|1066490444|http://...|844395|

|{false,...|French ...|e77dfaa2|1350920869|https:/...| null|

|{false,...|Turks a...|56664269| 280986223|http://...| null|

|{false,...| Ethiopia|628d6059| 881914195|https:/...|8ab45a|

|{false,...|Saint K...|85f9120c|1065114708|https:/...| null|

+----------+----------+--------+----------+----------+------+

only showing top 10 rows
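The sketch below makes the layout concrete using only Python's json module and hypothetical records. Each record is serialized on its own line with no enclosing array, and each line parses independently. spark.read.json reads this layout by default. A file holding one JSON document spread across several lines needs option("multiLine", True) instead.

```python
import json

# Hypothetical records, loosely shaped like the weblog rows above.
records = [
    {"session": "55fa8213", "country": "Bangladesh", "user": "dde312"},
    {"session": "2fcd4a83", "country": "Niue", "user": None},
]

# Write: one JSON document per line, no surrounding [ ... ] array.
jsonl_text = "".join(json.dumps(record) + "\n" for record in records)

# Read: every non-empty line is decoded on its own.
parsed = [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]

print(jsonl_text, end="")
```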

Save a DataFrame into a Hive catalog table

Save a DataFrame to a Hive-compatible catalog. Use table to save in the session's current database or database.table to save in a specific database.

auto_df.write.mode("overwrite").saveAsTable("autompg")

Load a Hive catalog table into a DataFrame

Load a DataFrame from a particular table. Use table to load from the session's current database or database.table to load from a specific database.

df = spark.table("autompg")

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Load a DataFrame from a SQL query

This example shows loading a DataFrame from a query run over a table in a Hive-compatible catalog.

df = spark.sql(

"select carname, mpg, horsepower from autompg where horsepower > 100 and mpg > 25"

)

# Code snippet result:

+----------+----+----------+

| carname| mpg|horsepower|

+----------+----+----------+

| bmw 2002|26.0| 113.0|

|chevrol...|28.8| 115.0|

|oldsmob...|26.8| 115.0|

|dodge colt|27.9| 105.0|

|datsun ...|32.7| 132.0|

|oldsmob...|26.6| 105.0|

+----------+----+----------+

Load a CSV file from Amazon S3

This example shows how to load a CSV file from AWS S3. This example uses a credential pair and the SimpleAWSCredentialsProvider. For other authentication options, refer to the Hadoop-AWS module documentation.

import configparser

import os

config = configparser.ConfigParser()

config.read(os.path.expanduser("~/.aws/credentials"))

access_key = config.get("default", "aws_access_key_id").replace('"', "")

secret_key = config.get("default", "aws_secret_access_key").replace('"', "")

# Requires compatible hadoop-aws and aws-java-sdk-bundle JARs.

spark.conf.set(

"fs.s3a.aws.credentials.provider",

"org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider",

)

spark.conf.set("fs.s3a.access.key", access_key)

spark.conf.set("fs.s3a.secret.key", secret_key)

df = (

spark.read.format("csv")

.option("header", True)

.load("s3a://cheatsheet111/auto-mpg.csv")

)

Load a CSV file from Oracle Cloud Infrastructure (OCI) Object Storage

This example shows loading data from Oracle Cloud Infrastructure Object Storage using an API key.

import oci

oci_config = oci.config.from_file()

# SparkContext.getConf() returns a copy, so values set on it have no effect on the

# running session. Set the connector options on the session configuration instead.

spark.conf.set("fs.oci.client.auth.tenantId", oci_config["tenancy"])

spark.conf.set("fs.oci.client.auth.userId", oci_config["user"])

spark.conf.set("fs.oci.client.auth.fingerprint", oci_config["fingerprint"])

spark.conf.set("fs.oci.client.auth.pemfilepath", oci_config["key_file"])

spark.conf.set(

"fs.oci.client.hostname",

"https://objectstorage.{0}.oraclecloud.com".format(oci_config["region"]),

)

PATH = "oci://<your_bucket>@<your_namespace>/<your_path>"

df = spark.read.format("csv").option("header", True).load(PATH)

Read an Oracle DB table into a DataFrame using a Wallet

Get the tnsname from tnsnames.ora. The wallet path should point to an extracted wallet file. The wallet files need to be available on all nodes.

password = "my_password"

table = "source_table"

tnsname = "my_tns_name"

user = "ADMIN"

wallet_path = "/path/to/your/wallet"

properties = {

"driver": "oracle.jdbc.driver.OracleDriver",

"oracle.net.tns_admin": wallet_path,

"password": password,

"user": user,

}

url = f"jdbc:oracle:thin:@{tnsname}?TNS_ADMIN={wallet_path}"

df = spark.read.jdbc(url=url, table=table, properties=properties)

Write a DataFrame to an Oracle DB table using a Wallet

Get the tnsname from tnsnames.ora. The wallet path should point to an extracted wallet file. The wallet files need to be available on all nodes.

password = "my_password"

table = "target_table"

tnsname = "my_tns_name"

user = "ADMIN"

wallet_path = "/path/to/your/wallet"

properties = {

"driver": "oracle.jdbc.driver.OracleDriver",

"oracle.net.tns_admin": wallet_path,

"password": password,

"user": user,

}

url = f"jdbc:oracle:thin:@{tnsname}?TNS_ADMIN={wallet_path}"

# Possible modes are "Append", "Overwrite", "Ignore", "Error"

df.write.jdbc(url=url, table=table, mode="Append", properties=properties)

Write a DataFrame to a Postgres table

You need a Postgres JDBC driver to connect to a Postgres database.

Options include:

- Add org.postgresql:postgresql:<version> to spark.jars.packages

- Provide the JDBC driver using spark-submit --jars

- Add the JDBC driver to your Spark runtime (not recommended)

If you use Delta Lake, there is a special procedure for specifying spark.jars.packages; see the source code that generates this file for details.

import os

pg_database = os.environ.get("PGDATABASE") or "postgres"

pg_host = os.environ.get("PGHOST") or "localhost"

pg_password = os.environ.get("PGPASSWORD") or "password"

pg_user = os.environ.get("PGUSER") or "postgres"

table = "autompg"

properties = {

"driver": "org.postgresql.Driver",

"user": pg_user,

"password": pg_password,

}

url = f"jdbc:postgresql://{pg_host}:5432/{pg_database}"

auto_df.write.jdbc(url=url, table=table, mode="Append", properties=properties)

Read a Postgres table into a DataFrame

You need a Postgres JDBC driver to connect to a Postgres database.

Options include:

- Add org.postgresql:postgresql:<version> to spark.jars.packages

- Provide the JDBC driver using spark-submit --jars

- Add the JDBC driver to your Spark runtime (not recommended)

import os

pg_database = os.environ.get("PGDATABASE") or "postgres"

pg_host = os.environ.get("PGHOST") or "localhost"

pg_password = os.environ.get("PGPASSWORD") or "password"

pg_user = os.environ.get("PGUSER") or "postgres"

table = "autompg"

properties = {

"driver": "org.postgresql.Driver",

"user": pg_user,

"password": pg_password,

}

url = f"jdbc:postgresql://{pg_host}:5432/{pg_database}"

df = spark.read.jdbc(url=url, table=table, properties=properties)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Data Handling Options

Special data handling scenarios.

Provide the schema when loading a DataFrame from CSV

See https://spark.apache.org/docs/latest/api/python/_modules/pyspark/sql/types.html for a list of types.

from pyspark.sql.types import (

DoubleType,

IntegerType,

StringType,

StructField,

StructType,

)

schema = StructType(

[

StructField("mpg", DoubleType(), True),

StructField("cylinders", IntegerType(), True),

StructField("displacement", DoubleType(), True),

StructField("horsepower", DoubleType(), True),

StructField("weight", DoubleType(), True),

StructField("acceleration", DoubleType(), True),

StructField("modelyear", IntegerType(), True),

StructField("origin", IntegerType(), True),

StructField("carname", StringType(), True),

]

)

df = (

spark.read.format("csv")

.option("header", "true")

.schema(schema)

.load("data/auto-mpg.csv")

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0|3504.0| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0|3693.0| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0|3436.0| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0|3433.0| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0|3449.0| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0|4341.0| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0|4354.0| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0|4312.0| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0|4425.0| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0|3850.0| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Save a DataFrame to CSV, overwriting existing data

auto_df.write.mode("overwrite").csv("output.csv")

Save a DataFrame to CSV with a header

See https://spark.apache.org/docs/latest/api/java/org/apache/spark/sql/DataFrameWriter.html for a list of supported options.

auto_df.coalesce(1).write.csv("header.csv", header="true")

Save a DataFrame in a single CSV file

This example outputs CSV data to a single file. Spark writes a directory called single.csv that contains one data file with a generated name starting with part-. DataFrameWriter has no option to choose that file name.

If you need to write to a single file with a name you choose, consider converting the DataFrame to a Pandas DataFrame and saving it using Pandas.

Either way, all data passes through a single node before being written, so be careful not to run out of memory.

auto_df.coalesce(1).write.csv("single.csv")

Save DataFrame as a dynamic partitioned table

When you write using dynamic partitioning, the output partitions are determined by the values of a column rather than specified in code.

The partition values appear as subdirectory names (for example modelyear=70) and are not stored in the output files, i.e. they become "virtual columns". When you read a partitioned table, these virtual columns are part of the DataFrame.

Dynamic partitioning can create many small files, which hurts performance. Make sure the partition columns do not have too many distinct values, and limit the number of partition columns.

spark.conf.set("spark.sql.sources.partitionOverwriteMode", "dynamic")

auto_df.write.mode("append").partitionBy("modelyear").saveAsTable(

"autompg_partitioned"

)

Overwrite specific partitions

Enabling dynamic partitioning lets you add or overwrite partitions based on DataFrame contents. Without dynamic partitioning (the default static mode), an overwrite replaces the entire table.

With dynamic partitioning, partitions with keys in the DataFrame are overwritten, but partitions not in the DataFrame are untouched.

spark.conf.set("spark.sql.sources.partitionOverwriteMode", "dynamic")

your_dataframe.write.mode("overwrite").insertInto("your_table")

Load a CSV file with a money column into a DataFrame

Spark's built-in casts do not understand currency formatting such as dollar signs or thousands separators. If you need to load monetary amounts, the safest option is to use a parsing library like money_parser.

from pyspark.sql.functions import udf

from pyspark.sql.types import DecimalType

from decimal import Decimal

# Load the text file.

df = (

spark.read.format("csv")

.option("header", True)

.load("data/customer_spend.csv")

)

# Convert with a hardcoded custom UDF.

money_udf = udf(

lambda x: Decimal(x[1:].replace(",", "")) if x is not None else None,

DecimalType(8, 4),

)

money1 = df.withColumn("spend_dollars", money_udf(df.spend_dollars))

# Convert with the money_parser library (much safer).

from money_parser import price_str

money_convert = udf(

lambda x: Decimal(price_str(x)) if x is not None else None,

DecimalType(8, 4),

)

df = df.withColumn("spend_dollars", money_convert(df.spend_dollars))

# Code snippet result:

+----------+-----------+-------------+

| date|customer_id|spend_dollars|

+----------+-----------+-------------+

|2020-01-31| 0| 0.0700|

|2020-01-31| 1| 0.9800|

|2020-01-31| 2| 0.0600|

|2020-01-31| 3| 0.6500|

|2020-01-31| 4| 0.5700|

|2020-02-29| 0| 0.1000|

|2020-02-29| 2| 4.4000|

|2020-02-29| 3| 0.3900|

|2020-02-29| 4| 2.1300|

|2020-02-29| 5| 0.8200|

+----------+-----------+-------------+

only showing top 10 rows
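The hardcoded UDF above assumes every value starts with exactly one currency character. A slightly more defensive pure-Python parser is sketched below. parse_money is a hypothetical helper, not part of Spark or money_parser. It strips surrounding whitespace, a leading "$" and thousands separators, and it returns None for nulls or unparseable text. It could be wrapped as udf(parse_money, DecimalType(8, 4)). Note that DecimalType(8, 4) leaves room for only four digits before the decimal point.

```python
from decimal import Decimal, InvalidOperation


def parse_money(value):
    """Convert strings like "$1,234.56" to Decimal; None for null or unparseable input.

    Hypothetical helper: handles whitespace, a leading "$" and comma thousands
    separators only (no other currencies or negative-amount formats).
    """
    if value is None:
        return None
    cleaned = value.strip().lstrip("$").replace(",", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        # Decimal raises InvalidOperation (ConversionSyntax) for text like "n/a".
        return None


print(parse_money("$1,234.56"))
```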

DataFrame Operations

Adding, removing and modifying DataFrame columns.

Add a new column to a DataFrame

withColumn returns a new DataFrame with a column added to the source DataFrame. withColumn can be chained together multiple times.

from pyspark.sql.functions import upper, lower

df = auto_df.withColumn("upper", upper(auto_df.carname)).withColumn(

"lower", lower(auto_df.carname)

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+----------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname| upper| lower|

+----+---------+------------+----------+------+------------+---------+------+----------+----------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|CHEVROL...|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|BUICK S...|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|PLYMOUT...|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|AMC REB...|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|FORD TO...|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|FORD GA...|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|CHEVROL...|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|PLYMOUT...|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|PONTIAC...|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|AMC AMB...|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+----------+----------+

only showing top 10 rows

Modify a DataFrame column

Modify a column in-place using withColumn, specifying the output column name to be the same as the existing column name.

from pyspark.sql.functions import col, concat, lit

df = auto_df.withColumn("modelyear", concat(lit("19"), col("modelyear")))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 1970| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 1970| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 1970| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 1970| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 1970| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 1970| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 1970| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 1970| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 1970| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 1970| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Add a column with multiple conditions

To set a new column's values when using withColumn, use the when / otherwise idiom. Multiple when conditions can be chained together.

from pyspark.sql.functions import col, when

df = auto_df.withColumn(

"mpg_class",

when(col("mpg") <= 20, "low")

.when(col("mpg") <= 30, "mid")

.when(col("mpg") <= 40, "high")

.otherwise("very high"),

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+---------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|mpg_class|

+----+---------+------------+----------+------+------------+---------+------+----------+---------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...| low|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...| low|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...| low|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...| low|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...| low|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...| low|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...| low|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...| low|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...| low|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...| low|

+----+---------+------------+----------+------+------------+---------+------+----------+---------+

only showing top 10 rows

Add a constant column

from pyspark.sql.functions import lit

df = auto_df.withColumn("one", lit(1))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+---+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|one|

+----+---------+------------+----------+------+------------+---------+------+----------+---+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...| 1|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...| 1|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...| 1|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...| 1|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...| 1|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...| 1|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...| 1|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...| 1|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...| 1|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...| 1|

+----+---------+------------+----------+------+------------+---------+------+----------+---+

only showing top 10 rows

Concatenate columns

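concat joins the values of several columns, and literals created with lit, into a single string column. If any input is null, the result is null.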

from pyspark.sql.functions import concat, col, lit

df = auto_df.withColumn(

"concatenated", concat(col("cylinders"), lit("_"), col("mpg"))

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|concatenated|

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...| 8_18.0|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...| 8_15.0|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...| 8_18.0|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...| 8_16.0|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...| 8_17.0|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...| 8_15.0|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...| 8_14.0|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...| 8_14.0|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...| 8_14.0|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...| 8_15.0|

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

only showing top 10 rows

Drop a column

df = auto_df.drop("horsepower")

# Code snippet result:

+----+---------+------------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+------+------------+---------+------+----------+

only showing top 10 rows

Change a column name

df = auto_df.withColumnRenamed("horsepower", "horses")

# Code snippet result:

+----+---------+------------+------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horses|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+------+------+------------+---------+------+----------+

only showing top 10 rows

Change multiple column names

To change several column names, chain withColumnRenamed calls together. To change all column names, see "Change all column names at once".

df = auto_df.withColumnRenamed("horsepower", "horses").withColumnRenamed(

"modelyear", "year"

)

# Code snippet result:

+----+---------+------------+------+------+------------+----+------+----------+

| mpg|cylinders|displacement|horses|weight|acceleration|year|origin| carname|

+----+---------+------------+------+------+------------+----+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+------+------+------------+----+------+----------+

only showing top 10 rows

Change all column names at once

To rename all columns, pass the new names to toDF as positional arguments, one per existing column and in the same order. This example puts an X in front of every column name.

df = auto_df.toDF(*["X" + name for name in auto_df.columns])

# Code snippet result:

+----+----------+-------------+-----------+-------+-------------+----------+-------+----------+

|Xmpg|Xcylinders|Xdisplacement|Xhorsepower|Xweight|Xacceleration|Xmodelyear|Xorigin| Xcarname|

+----+----------+-------------+-----------+-------+-------------+----------+-------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+----------+-------------+-----------+-------+-------------+----------+-------+----------+

only showing top 10 rows
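Because toDF assigns names by position, it can also rename only some columns. To do that, build the full name list and substitute new names where needed. The sketch below shows the name-building step in plain Python. The helper renamed_columns and the renames mapping are hypothetical examples. With a real DataFrame, you would pass the result as auto_df.toDF(*new_names).

```python
def renamed_columns(columns, renames):
    """Return a full column-name list for DataFrame.toDF, applying a partial mapping.

    Columns missing from `renames` keep their names, and order is preserved,
    because toDF matches names to columns by position.
    """
    unknown = set(renames) - set(columns)
    if unknown:
        raise KeyError(f"unknown columns: {sorted(unknown)}")
    return [renames.get(name, name) for name in columns]


columns = ["mpg", "cylinders", "displacement", "horsepower", "weight",
           "acceleration", "modelyear", "origin", "carname"]
new_names = renamed_columns(columns, {"horsepower": "horses", "modelyear": "year"})
print(new_names)
# With a real DataFrame: df = auto_df.toDF(*new_names)
```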

Convert a DataFrame column to a Python list

Steps below:

- select the target column. This example uses carname.

- Access the DataFrame's rdd using .rdd.

- Use flatMap to convert the rdd's Row objects into simple values.

- Use collect to assemble everything into a list.

names = auto_df.select("carname").rdd.flatMap(lambda x: x).collect()

print(str(names[:10]))

# Code snippet result:

['chevrolet chevelle malibu', 'buick skylark 320', 'plymouth satellite', 'amc rebel sst', 'ford torino', 'ford galaxie 500', 'chevrolet impala', 'plymouth fury iii', 'pontiac catalina', 'amc ambassador dpl']
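The flatMap(lambda x: x) trick works because a Row is a tuple, so flattening a one-column Row yields its single value. The plain-Python model below uses a namedtuple as a stand-in for Row, an assumption made so it runs without Spark. An equivalent PySpark form that avoids the RDD API is [row.carname for row in auto_df.select("carname").collect()]. In both forms, collect pulls every value into driver memory, so the approach only suits columns that fit there.

```python
from collections import namedtuple
from itertools import chain

# Stand-in for pyspark.sql.Row: like Row, a namedtuple is a tuple subclass.
CarRow = namedtuple("CarRow", ["carname"])

rows = [CarRow("chevrolet chevelle malibu"), CarRow("buick skylark 320")]

# What rdd.flatMap(lambda x: x) does: iterate each Row and emit its fields.
flattened = list(chain.from_iterable(rows))

# The attribute-access form, which also works on the output of collect().
by_attribute = [row.carname for row in rows]

print(flattened)  # ['chevrolet chevelle malibu', 'buick skylark 320']
```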

Convert a scalar query to a Python value

If you have a DataFrame with one row and one column, how do you access its value?

Steps below:

- Create a DataFrame with one row and one column. This example uses an average, but it could be anything.

- Call the DataFrame's first method, which returns the first Row of the DataFrame. Rows can be accessed like arrays, so extract the zeroth value of the first Row using first()[0].

average = auto_df.agg(dict(mpg="avg")).first()[0]

print(str(average))

# Code snippet result:

23.514572864321615

Consume a DataFrame row-wise as Python dictionaries

Steps below:

- collect all DataFrame Rows in the driver.

- Iterate over the Rows.

- Call the Row's asDict method to convert the Row to a Python dictionary.

first_three = auto_df.limit(3)

for row in first_three.collect():

    my_dict = row.asDict()

    print(my_dict)

# Code snippet result:

{'mpg': '18.0', 'cylinders': '8', 'displacement': '307.0', 'horsepower': '130.0', 'weight': '3504.', 'acceleration': '12.0', 'modelyear': '70', 'origin': '1', 'carname': 'chevrolet chevelle malibu'}

{'mpg': '15.0', 'cylinders': '8', 'displacement': '350.0', 'horsepower': '165.0', 'weight': '3693.', 'acceleration': '11.5', 'modelyear': '70', 'origin': '1', 'carname': 'buick skylark 320'}

{'mpg': '18.0', 'cylinders': '8', 'displacement': '318.0', 'horsepower': '150.0', 'weight': '3436.', 'acceleration': '11.0', 'modelyear': '70', 'origin': '1', 'carname': 'plymouth satellite'}

Select particular columns from a DataFrame

df = auto_df.select(["mpg", "cylinders", "displacement"])

# Code snippet result:

+----+---------+------------+

| mpg|cylinders|displacement|

+----+---------+------------+

|18.0| 8| 307.0|

|15.0| 8| 350.0|

|18.0| 8| 318.0|

|16.0| 8| 304.0|

|17.0| 8| 302.0|

|15.0| 8| 429.0|

|14.0| 8| 454.0|

|14.0| 8| 440.0|

|14.0| 8| 455.0|

|15.0| 8| 390.0|

+----+---------+------------+

only showing top 10 rows

Create an empty dataframe with a specified schema

You can create an empty DataFrame the same way you create other in-line DataFrames, but using an empty list.

from pyspark.sql.types import StructField, StructType, LongType, StringType

schema = StructType(

[

StructField("my_id", LongType(), True),

StructField("my_string", StringType(), True),

]

)

df = spark.createDataFrame([], schema)

# Code snippet result:

+-----+---------+

|my_id|my_string|

+-----+---------+

+-----+---------+

Create a constant dataframe

Constant DataFrames are mostly useful for unit tests.

import datetime

from pyspark.sql.types import (

StructField,

StructType,

LongType,

StringType,

TimestampType,

)

schema = StructType(

[

StructField("my_id", LongType(), True),

StructField("my_string", StringType(), True),

StructField("my_timestamp", TimestampType(), True),

]

)

df = spark.createDataFrame(

[

(1, "foo", datetime.datetime.strptime("2021-01-01", "%Y-%m-%d")),

(2, "bar", datetime.datetime.strptime("2021-01-02", "%Y-%m-%d")),

],

schema,

)

# Code snippet result:

+-----+---------+------------+

|my_id|my_string|my_timestamp|

+-----+---------+------------+

| 1| foo| 2021-01...|

| 2| bar| 2021-01...|

+-----+---------+------------+

Convert String to Double

from pyspark.sql.functions import col

df = auto_df.withColumn("horsepower", col("horsepower").cast("double"))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Convert String to Integer

from pyspark.sql.functions import col

df = auto_df.withColumn("horsepower", col("horsepower").cast("int"))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Get the size of a DataFrame

print("{} rows".format(auto_df.count()))

print("{} columns".format(len(auto_df.columns)))

# Code snippet result:

398 rows

9 columns

Get a DataFrame's number of partitions

print("{} partition(s)".format(auto_df.rdd.getNumPartitions()))

# Code snippet result:

1 partition(s)

Get data types of a DataFrame's columns

print(auto_df.dtypes)

# Code snippet result:

[('mpg', 'string'), ('cylinders', 'string'), ('displacement', 'string'), ('horsepower', 'string'), ('weight', 'string'), ('acceleration', 'string'), ('modelyear', 'string'), ('origin', 'string'), ('carname', 'string')]

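Convert an RDD to a DataFrame

If you have an RDD, how do you convert it to a DataFrame? Use the RDD's toDF method. It works most simply when the RDD contains Row objects, because the Row field names become the column names. RDDs of tuples or lists also work, but their columns get default names such as _1 unless you pass names to toDF.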

Steps below:

- Create an rdd to be converted to a DataFrame.

- Use the rdd's map method:

  - The example uses a lambda function to convert rdd elements to Rows.

  - The Row constructor accepts key/value pairs, with the key serving as the "column name".

  - Each rdd entry is converted to a dictionary, and the dictionary is unpacked to create the Row.

  - map creates a new rdd containing all the Row objects.

- This new rdd is converted to a DataFrame using the toDF method.

The second example is a variation on the first, modifying source rdd entries while creating the target rdd.

from pyspark.sql import Row

# First, get the RDD from the DataFrame.

rdd = auto_df.rdd

# This converts it back to a DataFrame with no changes.

df = rdd.map(lambda x: Row(**x.asDict())).toDF()

# This changes the rows before creating the DataFrame.

df = rdd.map(

lambda x: Row(**{k: v * 2 for (k, v) in x.asDict().items()})

).toDF()

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|36.0| 16.0| 614.0| 260.0|7008.0| 24.0| 140| 2|chevrol...|

|30.0| 16.0| 700.0| 330.0|7386.0| 23.0| 140| 2|buick s...|

|36.0| 16.0| 636.0| 300.0|6872.0| 22.0| 140| 2|plymout...|

|32.0| 16.0| 608.0| 300.0|6866.0| 24.0| 140| 2|amc reb...|

|34.0| 16.0| 604.0| 280.0|6898.0| 21.0| 140| 2|ford to...|

|30.0| 16.0| 858.0| 396.0|8682.0| 20.0| 140| 2|ford ga...|

|28.0| 16.0| 908.0| 440.0|8708.0| 18.0| 140| 2|chevrol...|

|28.0| 16.0| 880.0| 430.0|8624.0| 17.0| 140| 2|plymout...|

|28.0| 16.0| 910.0| 450.0|8850.0| 20.0| 140| 2|pontiac...|

|30.0| 16.0| 780.0| 380.0|7700.0| 17.0| 140| 2|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows
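As the dtypes output shows, auto_df reads every column as a string. On those columns, v * 2 in the second example would repeat each string ('18.0' becomes '18.018.0') instead of doubling it. The numeric result above therefore implies numerically typed columns. The plain-Python sketch below models the dictionary step and converts numeric fields before doubling. The helper double_row is hypothetical, and which fields count as numeric is an assumption you pass in explicitly. In Spark, it would be used as rdd.map(lambda x: Row(**double_row(x.asDict(), {...}))).toDF().

```python
def double_row(row_dict, numeric_fields):
    """Double the numeric fields of a Row-like dict; copy other fields unchanged."""
    return {
        k: float(v) * 2 if k in numeric_fields and v is not None else v
        for k, v in row_dict.items()
    }


row = {"mpg": "18.0", "cylinders": "8", "carname": "chevrolet chevelle malibu"}

# On strings, * 2 is repetition, not arithmetic.
print({k: v * 2 for k, v in row.items()}["mpg"])  # 18.018.0

print(double_row(row, {"mpg", "cylinders"}))
```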

Print the contents of an RDD

To see an RDD's contents, print the output of its take method, which returns the first n elements as a Python list.

rdd = auto_df.rdd

print(rdd.take(10))

# Code snippet result:

[Row(mpg='18.0', cylinders='8', displacement='307.0', horsepower='130.0', weight='3504.', acceleration='12.0', modelyear='70', origin='1', carname='chevrolet chevelle malibu'), Row(mpg='15.0', cylinders='8', displacement='350.0', horsepower='165.0', weight='3693.', acceleration='11.5', modelyear='70', origin='1', carname='buick skylark 320'), Row(mpg='18.0', cylinders='8', displacement='318.0', horsepower='150.0', weight='3436.', acceleration='11.0', modelyear='70', origin='1', carname='plymouth satellite'), Row(mpg='16.0', cylinders='8', displacement='304.0', horsepower='150.0', weight='3433.', acceleration='12.0', modelyear='70', origin='1', carname='amc rebel sst'), Row(mpg='17.0', cylinders='8', displacement='302.0', horsepower='140.0', weight='3449.', acceleration='10.5', modelyear='70', origin='1', carname='ford torino'), Row(mpg='15.0', cylinders='8', displacement='429.0', horsepower='198.0', weight='4341.', acceleration='10.0', modelyear='70', origin='1', carname='ford galaxie 500'), Row(mpg='14.0', cylinders='8', displacement='454.0', horsepower='220.0', weight='4354.', acceleration='9.0', modelyear='70', origin='1', carname='chevrolet impala'), Row(mpg='14.0', cylinders='8', displacement='440.0', horsepower='215.0', weight='4312.', acceleration='8.5', modelyear='70', origin='1', carname='plymouth fury iii'), Row(mpg='14.0', cylinders='8', displacement='455.0', horsepower='225.0', weight='4425.', acceleration='10.0', modelyear='70', origin='1', carname='pontiac catalina'), Row(mpg='15.0', cylinders='8', displacement='390.0', horsepower='190.0', weight='3850.', acceleration='8.5', modelyear='70', origin='1', carname='amc ambassador dpl')]

Print the contents of a DataFrame

auto_df.show(10)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Process each row of a DataFrame

Use the DataFrame's foreach method to run a Python function on each row. The function receives one argument, a Row object, whose attributes are named after the DataFrame's columns. foreach runs on the executors, so on a cluster any output the function produces appears in the executor logs, not on the driver.

import os

def foreach_function(row):

    if row.horsepower is not None:

        os.system("echo " + row.horsepower)

auto_df.foreach(foreach_function)
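The os.system call above passes the horsepower text to a shell, so a value containing characters such as ; would be run as a command. The sketch below is a safer variant that assumes the goal is still just to echo the value. It passes an argument list to subprocess.run, so no shell is involved. FakeRow is a hypothetical namedtuple standing in for pyspark.sql.Row so the function can be exercised without Spark. The function itself would be passed to auto_df.foreach unchanged.

```python
import subprocess
from collections import namedtuple


def foreach_function(row):
    if row.horsepower is not None:
        # An argument list (no shell=True) hands the value to echo verbatim,
        # so characters such as ; or $(...) are not interpreted by a shell.
        subprocess.run(["echo", str(row.horsepower)], check=True)


# Hypothetical stand-in for pyspark.sql.Row, for local testing only.
FakeRow = namedtuple("FakeRow", ["horsepower"])

foreach_function(FakeRow("130.0"))
foreach_function(FakeRow(None))  # skipped: NULL horsepower
```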

DataFrame Map example

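You can run map on a DataFrame by accessing its underlying RDD. For most transformations it is simpler to use DataFrame operations such as select or withColumn directly. map is mainly useful when you have code written specifically for RDDs that you need to use against a DataFrame. Because map_function returns a one-element list per row, toDF names the resulting column _1.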

def map_function(row):

    if row.horsepower is not None:

        return [float(row.horsepower) * 10]

    else:

        return [None]

df = auto_df.rdd.map(map_function).toDF()

# Code snippet result:

+------+

| _1|

+------+

|1300.0|

|1650.0|

|1500.0|

|1500.0|

|1400.0|

|1980.0|

|2200.0|

|2150.0|

|2250.0|

|1900.0|

+------+

only showing top 10 rows

DataFrame Flatmap example

Use flatMap when you have a function that produces a list of values per input Row. flatMap is an RDD operation, so access the DataFrame's RDD, call flatMap, and convert the resulting RDD back into a DataFrame. Spark flattens the returned lists into the output RDD.

Note also that you can yield results rather than returning full lists, which can simplify code considerably.

from pyspark.sql.types import Row

def flatmap_function(row):

    if row.cylinders is not None:

        return list(range(int(row.cylinders)))

    else:

        return [None]

rdd = auto_df.rdd.flatMap(flatmap_function)

row = Row("val")

df = rdd.map(row).toDF()

# Code snippet result:

+---+

|val|

+---+

| 0|

| 1|

| 2|

| 3|

| 4|

| 5|

| 6|

| 7|

| 0|

| 1|

+---+

only showing top 10 rows
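As noted above, a flatMap function can yield values instead of building a list. The sketch below is a generator version of flatmap_function that could be passed to auto_df.rdd.flatMap unchanged. To check it without Spark, it uses itertools.chain.from_iterable to model how flatMap concatenates the per-row outputs. The namedtuple stand-in for Row is an assumption made for local testing.

```python
from collections import namedtuple
from itertools import chain


def flatmap_function(row):
    """Yield 0..cylinders-1 for each row, or a single None if cylinders is missing."""
    if row.cylinders is not None:
        yield from range(int(row.cylinders))
    else:
        yield None


# Stand-in for pyspark.sql.Row, for local testing only.
CarRow = namedtuple("CarRow", ["cylinders"])
rows = [CarRow("4"), CarRow(None), CarRow("3")]

# Models rdd.flatMap(flatmap_function): every row's outputs joined into one sequence.
print(list(chain.from_iterable(flatmap_function(r) for r in rows)))
# [0, 1, 2, 3, None, 0, 1, 2]
```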

Create a custom UDF

Create a UDF by providing a function to the udf function. This example shows a lambda function. You can also use ordinary functions for more complex UDFs.

from pyspark.sql.functions import col, udf

from pyspark.sql.types import StringType

first_word_udf = udf(lambda x: x.split()[0], StringType())

df = auto_df.withColumn("manufacturer", first_word_udf(col("carname")))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|manufacturer|

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...| chevrolet|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...| buick|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...| plymouth|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...| amc|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...| ford|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...| ford|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...| chevrolet|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...| plymouth|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...| pontiac|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...| amc|

+----+---------+------------+----------+------+------------+---------+------+----------+------------+

only showing top 10 rows
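The lambda above fails if carname is NULL (None has no split method) or blank (''.split() returns an empty list, so [0] raises IndexError). The sketch below is a slightly more defensive version written as an ordinary function, as the text suggests. It returns None, which Spark stores as NULL, in those cases. The name first_word is illustrative. You would register it the same way, e.g. udf(first_word, StringType()).

```python
def first_word(text):
    """Return the first whitespace-separated word, or None for NULL or blank input."""
    if text is None:
        return None
    words = text.split()
    return words[0] if words else None


print(first_word("chevrolet chevelle malibu"))  # chevrolet
print(first_word("   "))                        # None
print(first_word(None))                         # None
```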

Transforming Data

Data conversions and other modifications.

Run a SparkSQL Statement on a DataFrame

You can run arbitrary SQL statements on a DataFrame provided you:

- Register the DataFrame as a temporary table using registerTempTable.

- Use sqlContext.sql and use the temp table name you specified as the table source.

registerTempTable has been deprecated since Spark 2.0 in favor of createOrReplaceTempView, and spark.sql on a SparkSession can be used in place of sqlContext.sql.

You can also join DataFrames if you register them. If you're porting complex SQL from another application, this can be a lot easier than converting it to use the DataFrame APIs.


auto_df.registerTempTable("auto_df")

df = sqlContext.sql(

"select modelyear, avg(mpg) from auto_df group by modelyear"

)

# Code snippet result:

+---------+----------+

|modelyear| avg(mpg)|

+---------+----------+

| 73| 17.1|

| 71| 21.25|

| 70|17.6896...|

| 75|20.2666...|

| 78|24.0611...|

| 77| 23.375|

| 82|31.7096...|

| 81|30.3344...|

| 79|25.0931...|

| 72|18.7142...|

+---------+----------+

only showing top 10 rows

Extract data from a string using a regular expression

from pyspark.sql.functions import col, regexp_extract

group = 0

df = (

auto_df.withColumn(

"identifier", regexp_extract(col("carname"), r"(\S?\d+)", group)

)

.drop("acceleration")

.drop("cylinders")

.drop("displacement")

.drop("modelyear")

.drop("mpg")

.drop("origin")

.drop("horsepower")

.drop("weight")

)

# Code snippet result:

+----------+----------+

| carname|identifier|

+----------+----------+

|chevrol...| |

|buick s...| 320|

|plymout...| |

|amc reb...| |

|ford to...| |

|ford ga...| 500|

|chevrol...| |

|plymout...| |

|pontiac...| |

|amc amb...| |

+----------+----------+

only showing top 10 rows
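Trying the same pattern with Python's re module shows why most rows come back empty. The sketch below models regexp_extract under two stated assumptions. First, Spark uses Java regular expressions, which behave like Python's re for this simple pattern. Second, regexp_extract returns an empty string, not NULL, when there is no match. The raw string r"..." lets \S and \d reach the regex engine unchanged.

```python
import re


def model_regexp_extract(text, pattern, group):
    """Plain-Python model of Spark's regexp_extract: '' when there is no match."""
    match = re.search(pattern, text)
    return match.group(group) if match else ""


pattern = r"(\S?\d+)"
for name in ["chevrolet chevelle malibu", "buick skylark 320", "ford galaxie 500", "hi 1200d"]:
    print(repr(model_regexp_extract(name, pattern, 0)))
# '', '320', '500', '1200'
```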

Fill NULL values in specific columns

df = auto_df.fillna({"horsepower": 0})

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Fill NULL values with column average

from pyspark.sql.functions import avg

df = auto_df.fillna({"horsepower": auto_df.agg(avg("horsepower")).first()[0]})

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Fill NULL values with group average

Sometimes NULL values in a column cause problems, and it's better to guess at a value than leave it NULL. There are several strategies for dealing with this. This example replaces NULL horsepower values with the average horsepower of cars that have the same number of cylinders.

from pyspark.sql.functions import coalesce

unmodified_columns = auto_df.columns

unmodified_columns.remove("horsepower")

manufacturer_avg = auto_df.groupBy("cylinders").agg({"horsepower": "avg"})

df = auto_df.join(manufacturer_avg, "cylinders").select(

*unmodified_columns,

coalesce("horsepower", "avg(horsepower)").alias("horsepower"),

)

# Code snippet result:

+----+---------+------------+------+------------+---------+------+----------+----------+

| mpg|cylinders|displacement|weight|acceleration|modelyear|origin| carname|horsepower|

+----+---------+------------+------+------------+---------+------+----------+----------+

|18.0| 8| 307.0| 3504.| 12.0| 70| 1|chevrol...| 130.0|

|15.0| 8| 350.0| 3693.| 11.5| 70| 1|buick s...| 165.0|

|18.0| 8| 318.0| 3436.| 11.0| 70| 1|plymout...| 150.0|

|16.0| 8| 304.0| 3433.| 12.0| 70| 1|amc reb...| 150.0|

|17.0| 8| 302.0| 3449.| 10.5| 70| 1|ford to...| 140.0|

|15.0| 8| 429.0| 4341.| 10.0| 70| 1|ford ga...| 198.0|

|14.0| 8| 454.0| 4354.| 9.0| 70| 1|chevrol...| 220.0|

|14.0| 8| 440.0| 4312.| 8.5| 70| 1|plymout...| 215.0|

|14.0| 8| 455.0| 4425.| 10.0| 70| 1|pontiac...| 225.0|

|15.0| 8| 390.0| 3850.| 8.5| 70| 1|amc amb...| 190.0|

+----+---------+------------+------+------------+---------+------+----------+----------+

only showing top 10 rows
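The join-plus-coalesce pattern above can be modeled in plain Python to make the logic explicit. First, compute the average horsepower per cylinder count over non-NULL values only, as Spark's avg does. Then use that average only where horsepower is missing. The rows below are made-up sample data, not taken from the Auto MPG set. One difference from the Spark snippet: auto_df.join(...) is an inner join, so a row whose cylinders value is NULL would be dropped rather than kept.

```python
from collections import defaultdict

rows = [
    {"cylinders": 8, "horsepower": 130.0},
    {"cylinders": 8, "horsepower": None},
    {"cylinders": 8, "horsepower": 150.0},
    {"cylinders": 4, "horsepower": 90.0},
    {"cylinders": 4, "horsepower": None},
]

# Per-group average over non-NULL values, like groupBy("cylinders").agg({"horsepower": "avg"}).
totals = defaultdict(lambda: [0.0, 0])
for r in rows:
    if r["horsepower"] is not None:
        totals[r["cylinders"]][0] += r["horsepower"]
        totals[r["cylinders"]][1] += 1
group_avg = {k: s / n for k, (s, n) in totals.items()}

# coalesce(horsepower, avg(horsepower)): keep the original value unless it is NULL.
filled = [
    {**r, "horsepower": r["horsepower"] if r["horsepower"] is not None
     else group_avg.get(r["cylinders"])}
    for r in rows
]
print([r["horsepower"] for r in filled])  # [130.0, 140.0, 150.0, 90.0, 90.0]
```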

Unpack a DataFrame's JSON column to a new DataFrame

from pyspark.sql.functions import col, json_tuple

source = spark.sparkContext.parallelize(

[["1", '{ "a" : 10, "b" : 11 }'], ["2", '{ "a" : 20, "b" : 21 }']]

).toDF(["id", "json"])

df = source.select("id", json_tuple(col("json"), "a", "b"))

# Code snippet result:

+---+---+---+

| id| c0| c1|

+---+---+---+

| 1| 10| 11|

| 2| 20| 21|

+---+---+---+
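json_tuple returns each requested field as a string column, named c0, c1, ... in argument order, with NULL for fields that are absent. The plain-Python sketch below models that behavior with the json module. It assumes flat JSON whose values are integers or strings. The first two sample records mirror the snippet above, and the third is a hypothetical record missing "b".

```python
import json


def model_json_tuple(json_text, *fields):
    """Model of json_tuple for flat JSON with int/str values: strings, None if absent."""
    obj = json.loads(json_text)
    return tuple(None if obj.get(f) is None else str(obj[f]) for f in fields)


source = [
    ("1", '{ "a" : 10, "b" : 11 }'),
    ("2", '{ "a" : 20, "b" : 21 }'),
    ("3", '{ "a" : 30 }'),
]
for row_id, text in source:
    print((row_id, *model_json_tuple(text, "a", "b")))
# ('1', '10', '11'), ('2', '20', '21'), ('3', '30', None)
```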

Query a JSON column

If you have JSON text data embedded in a String column, json_tuple will parse that text and extract fields within the JSON text.

from pyspark.sql.functions import col, json_tuple

source = spark.sparkContext.parallelize(

[["1", '{ "a" : 10, "b" : 11 }'], ["2", '{ "a" : 20, "b" : 21 }']]

).toDF(["id", "json"])

df = (

source.select("id", json_tuple(col("json"), "a", "b"))

.withColumnRenamed("c0", "a")

.withColumnRenamed("c1", "b")

.where(col("b") > 15)

)

# Code snippet result:

+---+---+---+

| id| a| b|

+---+---+---+

| 2| 20| 21|

+---+---+---+

Sorting and Searching

Filtering, sorting, removing duplicates and more.

Filter a column using a condition

from pyspark.sql.functions import col

# mpg is stored as a string, so this is a text comparison: "9.0" > "30" is true.

df = auto_df.filter(col("mpg") > "30")

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

| 9.0| 8| 304.0| 193.0| 4732.| 18.5| 70| 1| hi 1200d|

|30.0| 4| 79.00| 70.00| 2074.| 19.5| 71| 2|peugeot...|

|30.0| 4| 88.00| 76.00| 2065.| 14.5| 71| 2| fiat 124b|

|31.0| 4| 71.00| 65.00| 1773.| 19.0| 71| 3|toyota ...|

|35.0| 4| 72.00| 69.00| 1613.| 18.0| 71| 3|datsun ...|

|31.0| 4| 79.00| 67.00| 1950.| 19.0| 74| 3|datsun ...|

|32.0| 4| 71.00| 65.00| 1836.| 21.0| 74| 3|toyota ...|

|31.0| 4| 76.00| 52.00| 1649.| 16.5| 74| 3|toyota ...|

|32.0| 4| 83.00| 61.00| 2003.| 19.0| 74| 3|datsun 710|

|31.0| 4| 79.00| 67.00| 2000.| 16.0| 74| 2| fiat x1.9|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows
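The plain-Python sketch below reproduces the difference between comparing mpg values as text and as numbers, using a few sample mpg strings. It needs no Spark. In PySpark, the numeric version of this filter would be col("mpg").cast("double") > 30.

```python
mpg_values = ["18.0", "9.0", "30.0", "31.0", "35.0"]

# Text comparison, like col("mpg") > "30" on a string column.
as_text = [v for v in mpg_values if v > "30"]

# Numeric comparison, like col("mpg").cast("double") > 30.
as_number = [v for v in mpg_values if float(v) > 30]

print(as_text)    # ['9.0', '30.0', '31.0', '35.0']
print(as_number)  # ['31.0', '35.0']
```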

Filter based on a specific column value

from pyspark.sql.functions import col

df = auto_df.where(col("cylinders") == "8")

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Filter based on an IN list

from pyspark.sql.functions import col

df = auto_df.where(col("cylinders").isin(["4", "6"]))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|24.0| 4| 113.0| 95.00| 2372.| 15.0| 70| 3|toyota ...|

|22.0| 6| 198.0| 95.00| 2833.| 15.5| 70| 1|plymout...|

|18.0| 6| 199.0| 97.00| 2774.| 15.5| 70| 1|amc hornet|

|21.0| 6| 200.0| 85.00| 2587.| 16.0| 70| 1|ford ma...|

|27.0| 4| 97.00| 88.00| 2130.| 14.5| 70| 3|datsun ...|

|26.0| 4| 97.00| 46.00| 1835.| 20.5| 70| 2|volkswa...|

|25.0| 4| 110.0| 87.00| 2672.| 17.5| 70| 2|peugeot...|

|24.0| 4| 107.0| 90.00| 2430.| 14.5| 70| 2|audi 10...|

|25.0| 4| 104.0| 95.00| 2375.| 17.5| 70| 2| saab 99e|

|26.0| 4| 121.0| 113.0| 2234.| 12.5| 70| 2| bmw 2002|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Filter based on a NOT IN list

from pyspark.sql.functions import col

df = auto_df.where(~col("cylinders").isin(["4", "6"]))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Filter values based on keys in another DataFrame

If DataFrame 1 contains values you want to remove from DataFrame 2, join DataFrame 2 to DataFrame 1 using a left_anti join, which keeps only the rows of the left DataFrame that have no match in the right one.

from pyspark.sql.functions import col

# Our DataFrame of keys to exclude.

exclude_keys = auto_df.select(

(col("modelyear") + 1).alias("adjusted_year")

).distinct()

# The anti join returns only rows whose key has no match.

filtered = auto_df.join(

exclude_keys,

how="left_anti",

on=auto_df.modelyear == exclude_keys.adjusted_year,

)

# Alternatively we can register a temporary table and use a SQL expression.

exclude_keys.registerTempTable("exclude_keys")

df = auto_df.filter(

"modelyear not in ( select adjusted_year from exclude_keys )"

)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8.0| 307.0| 130.0|3504.0| 12.0| 70| 1|chevrol...|

|15.0| 8.0| 350.0| 165.0|3693.0| 11.5| 70| 1|buick s...|

|18.0| 8.0| 318.0| 150.0|3436.0| 11.0| 70| 1|plymout...|

|16.0| 8.0| 304.0| 150.0|3433.0| 12.0| 70| 1|amc reb...|

|17.0| 8.0| 302.0| 140.0|3449.0| 10.5| 70| 1|ford to...|

|15.0| 8.0| 429.0| 198.0|4341.0| 10.0| 70| 1|ford ga...|

|14.0| 8.0| 454.0| 220.0|4354.0| 9.0| 70| 1|chevrol...|

|14.0| 8.0| 440.0| 215.0|4312.0| 8.5| 70| 1|plymout...|

|14.0| 8.0| 455.0| 225.0|4425.0| 10.0| 70| 1|pontiac...|

|15.0| 8.0| 390.0| 190.0|3850.0| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows
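The two approaches in the snippet can differ when NULLs are involved, following standard SQL semantics. With a non-empty key list, a row whose key is NULL is kept by left_anti, because NULL matches nothing, but is excluded by NOT IN. And if the subquery returns any NULL, NOT IN keeps no rows at all. The plain-Python sketch below models both. The model years are made-up sample data, and the helper names are hypothetical.

```python
def left_anti(rows, key, exclude_keys):
    """Keep rows whose key matches nothing in exclude_keys; a NULL key never matches."""
    keys = {k for k in exclude_keys if k is not None}
    return [r for r in rows if r[key] is None or r[key] not in keys]


def sql_not_in(value, keys):
    """SQL three-valued `value NOT IN (keys)`: True, False, or None (unknown)."""
    if not keys:
        return True
    if value is None:
        return None
    if value in [k for k in keys if k is not None]:
        return False
    return None if None in keys else True


rows = [{"modelyear": 70}, {"modelyear": 71}, {"modelyear": None}]

print(left_anti(rows, "modelyear", [71, 72]))
# A SQL filter keeps a row only when the condition is True (not False or unknown).
print([r for r in rows if sql_not_in(r["modelyear"], [71, 72]) is True])
print([r for r in rows if sql_not_in(r["modelyear"], [71, None]) is True])
```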

Get DataFrame rows that match a substring

df = auto_df.where(auto_df.carname.contains("custom"))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|16.0| 6| 225.0| 105.0| 3439.| 15.5| 71| 1|plymout...|

|13.0| 8| 350.0| 155.0| 4502.| 13.5| 72| 1|buick l...|

|14.0| 8| 318.0| 150.0| 4077.| 14.0| 72| 1|plymout...|

|15.0| 8| 318.0| 150.0| 3777.| 12.5| 73| 1|dodge c...|

|12.0| 8| 455.0| 225.0| 4951.| 11.0| 73| 1|buick e...|

|16.0| 6| 250.0| 100.0| 3278.| 18.0| 73| 1|chevrol...|

|13.0| 8| 360.0| 170.0| 4654.| 13.0| 73| 1|plymout...|

|15.0| 8| 318.0| 150.0| 3399.| 11.0| 73| 1|dodge d...|

|14.0| 8| 318.0| 150.0| 4457.| 13.5| 74| 1|dodge c...|

|19.0| 6| 225.0| 95.00| 3264.| 16.0| 75| 1|plymout...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Filter a DataFrame with a SQL LIKE pattern

from pyspark.sql.functions import col

df = auto_df.where(col("carname").like("%custom%"))

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|16.0| 6| 225.0| 105.0| 3439.| 15.5| 71| 1|plymout...|

|13.0| 8| 350.0| 155.0| 4502.| 13.5| 72| 1|buick l...|

|14.0| 8| 318.0| 150.0| 4077.| 14.0| 72| 1|plymout...|

|15.0| 8| 318.0| 150.0| 3777.| 12.5| 73| 1|dodge c...|

|12.0| 8| 455.0| 225.0| 4951.| 11.0| 73| 1|buick e...|

|16.0| 6| 250.0| 100.0| 3278.| 18.0| 73| 1|chevrol...|

|13.0| 8| 360.0| 170.0| 4654.| 13.0| 73| 1|plymout...|

|15.0| 8| 318.0| 150.0| 3399.| 11.0| 73| 1|dodge d...|

|14.0| 8| 318.0| 150.0| 4457.| 13.5| 74| 1|dodge c...|

|19.0| 6| 225.0| 95.00| 3264.| 16.0| 75| 1|plymout...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Filter based on a column's length

from pyspark.sql.functions import col, length

df = auto_df.where(length(col("carname")) < 12)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|18.0| 6| 199.0| 97.00| 2774.| 15.5| 70| 1|amc hornet|

|25.0| 4| 110.0| 87.00| 2672.| 17.5| 70| 2|peugeot...|

|24.0| 4| 107.0| 90.00| 2430.| 14.5| 70| 2|audi 10...|

|25.0| 4| 104.0| 95.00| 2375.| 17.5| 70| 2| saab 99e|

|26.0| 4| 121.0| 113.0| 2234.| 12.5| 70| 2| bmw 2002|

|21.0| 6| 199.0| 90.00| 2648.| 15.0| 70| 1|amc gre...|

|10.0| 8| 360.0| 215.0| 4615.| 14.0| 70| 1| ford f250|

|10.0| 8| 307.0| 200.0| 4376.| 15.0| 70| 1| chevy c20|

|11.0| 8| 318.0| 210.0| 4382.| 13.5| 70| 1|dodge d200|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Multiple filter conditions

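When you combine filter conditions, remember that filter takes Column expressions, not plain Python booleans, so Python's and, or and not do not work on them. Use the bitwise operators & (and), | (or) and ~ (not) instead, and wrap each condition in parentheses, because these operators bind more tightly than comparisons such as > and <.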

from pyspark.sql.functions import col

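# Caution: auto_df's columns hold strings, so comparing them with string literals such
# as "30" is a lexicographic comparison. That is why the mpg 30.0 row in the result
# below passes > "30". For a numeric comparison, cast first:
# col("mpg").cast("double") > 30

# OR
df = auto_df.filter((col("mpg") > "30") | (col("acceleration") < "10"))

# AND
df = auto_df.filter((col("mpg") > "30") & (col("acceleration") < "13"))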

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|32.7| 6| 168.0| 132.0| 2910.| 11.4| 80| 3|datsun ...|

|30.0| 4| 135.0| 84.00| 2385.| 12.9| 81| 1|plymout...|

|32.0| 4| 135.0| 84.00| 2295.| 11.6| 82| 1|dodge r...|

+----+---------+------------+----------+------+------------+---------+------+----------+

Sort DataFrame by a column

from pyspark.sql.functions import col

# Ascending (the default).
df = auto_df.orderBy("carname")

# Descending.
df = auto_df.orderBy(col("carname").desc())

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|31.9| 4| 89.00| 71.00| 1925.| 14.0| 79| 2|vw rabb...|

|44.3| 4| 90.00| 48.00| 2085.| 21.7| 80| 2|vw rabb...|

|29.0| 4| 90.00| 70.00| 1937.| 14.2| 76| 2| vw rabbit|

|41.5| 4| 98.00| 76.00| 2144.| 14.7| 80| 2| vw rabbit|

|44.0| 4| 97.00| 52.00| 2130.| 24.6| 82| 2| vw pickup|

|43.4| 4| 90.00| 48.00| 2335.| 23.7| 80| 2|vw dash...|

|30.7| 6| 145.0| 76.00| 3160.| 19.6| 81| 2|volvo d...|

|17.0| 6| 163.0| 125.0| 3140.| 13.6| 78| 2|volvo 2...|

|20.0| 4| 130.0| 102.0| 3150.| 15.7| 76| 2| volvo 245|

|22.0| 4| 121.0| 98.00| 2945.| 14.5| 75| 2|volvo 2...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Take the first N rows of a DataFrame

n = 10

df = auto_df.limit(n)

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|18.0| 8| 307.0| 130.0| 3504.| 12.0| 70| 1|chevrol...|

|15.0| 8| 350.0| 165.0| 3693.| 11.5| 70| 1|buick s...|

|18.0| 8| 318.0| 150.0| 3436.| 11.0| 70| 1|plymout...|

|16.0| 8| 304.0| 150.0| 3433.| 12.0| 70| 1|amc reb...|

|17.0| 8| 302.0| 140.0| 3449.| 10.5| 70| 1|ford to...|

|15.0| 8| 429.0| 198.0| 4341.| 10.0| 70| 1|ford ga...|

|14.0| 8| 454.0| 220.0| 4354.| 9.0| 70| 1|chevrol...|

|14.0| 8| 440.0| 215.0| 4312.| 8.5| 70| 1|plymout...|

|14.0| 8| 455.0| 225.0| 4425.| 10.0| 70| 1|pontiac...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

+----+---------+------------+----------+------+------------+---------+------+----------+

Get distinct values of a column

df = auto_df.select("cylinders").distinct()

# Code snippet result:

+---------+

|cylinders|

+---------+

| 3|

| 8|

| 5|

| 6|

| 4|

+---------+

Remove duplicates

df = auto_df.dropDuplicates(["carname"])

# Code snippet result:

+----+---------+------------+----------+------+------------+---------+------+----------+

| mpg|cylinders|displacement|horsepower|weight|acceleration|modelyear|origin| carname|

+----+---------+------------+----------+------+------------+---------+------+----------+

|13.0| 8| 360.0| 175.0| 3821.| 11.0| 73| 1|amc amb...|

|15.0| 8| 390.0| 190.0| 3850.| 8.5| 70| 1|amc amb...|

|17.0| 8| 304.0| 150.0| 3672.| 11.5| 72| 1|amc amb...|

|19.4| 6| 232.0| 90.00| 3210.| 17.2| 78| 1|amc con...|

|18.1| 6| 258.0| 120.0| 3410.| 15.1| 78| 1|amc con...|

|23.0| 4| 151.0| null| 3035.| 20.5| 82| 1|amc con...|

|20.2| 6| 232.0| 90.00| 3265.| 18.2| 79| 1|amc con...|

|21.0| 6| 199.0| 90.00| 2648.| 15.0| 70| 1|amc gre...|

|18.0| 6| 199.0| 97.00| 2774.| 15.5| 70| 1|amc hornet|

|18.0| 6| 258.0| 110.0| 2962.| 13.5| 71| 1|amc hor...|

+----+---------+------------+----------+------+------------+---------+------+----------+

only showing top 10 rows

Grouping

Group DataFrame rows by one or more key columns to compute aggregates such as counts, sums and averages.

Count the rows for each value of a column (count(*) with GROUP BY)

from pyspark.sql.functions import desc

# No sorting.

df = auto_df.groupBy("cylinders").count()

# With sorting.

df = auto_df.groupBy("cylinders").count().orderBy(desc("count"))

# Code snippet result:

+---------+-----+

|cylinders|count|

+---------+-----+

| 4| 204|

| 8| 103|

| 6| 84|

| 3| 4|

| 5| 3|

+---------+-----+

Group and sort

from pyspark.sql.functions import avg, desc

df = (
    auto_df.groupBy("cylinders")
    .agg(avg("horsepower").alias("avg_horsepower"))
    .orderBy(desc("avg_horsepower"))
)

# Code snippet result:

+---------+--------------+

|cylinders|avg_horsepower|

+---------+--------------+

| 8| 158.300...|

| 6| 101.506...|

| 3| 99.25|

| 5| 82.3333...|

| 4| 78.2814...|

+---------+--------------+

Filter groups based on an aggregate value, equivalent to SQL HAVING clause

To filter on an aggregated value, call .filter on the DataFrame after the aggregation and refer to the column the aggregation produces (for groupBy().count(), that column is named count).

from pyspark.sql.functions import col, desc

df = (
    auto_df.groupBy("cylinders")
    .count()
    .orderBy(desc("count"))
    .filter(col("count") > 100)
)

# Code snippet result:

+---------+-----+

|cylinders|count|